KEY INSIGHTS:

- ChatGPT is a powerful tool that has spread like wildfire and seems like it is here to stay.

- But while it provides some good information, it is also error-prone and answers can change depending on the language used.

- When using it, be aware of its biases and the motives behind it, and fact-check its answers, especially if you are unfamiliar with the subject matter.

Since OpenAI launched its much-discussed ChatGPT chatbot in November, it has spread like wildfire. It has been used for everything from innocuous tasks, like building websites, to more controversial ones, like university administrators using it to compose an email after a mass shooting. The artificial intelligence chatbot is a free-ish, simple-to-use novelty that (sometimes) gives coherent answers, and it seems to be here to stay.

But researchers have major questions about the potential impacts of ChatGPT and other AI bots becoming a major source of information. Even ChatGPT itself is open about some of its pitfalls. Whenever you start a new chat, the bot gives some example prompts, explains its capabilities and then, importantly, its limitations: “May occasionally generate incorrect information.” “May occasionally produce harmful instructions or biased content.” “Limited knowledge of world and events after 2021.”

The bot’s potential for wrong, biased and/or harmful content is particularly alarming for people who are already affected by bias in the real world, like Black founders.

Two reporters at The Plug asked ChatGPT a series of questions about the Black tech ecosystem, Black founders and the pros and cons of using the AI. We found that while it provided some good information, it was also error-prone, and its answers changed depending on the wording of the question.

We also spoke to AI experts about the potential consequences of investors, researchers and the general public turning to AI bots like ChatGPT for information on the Black tech ecosystem.

Language matters

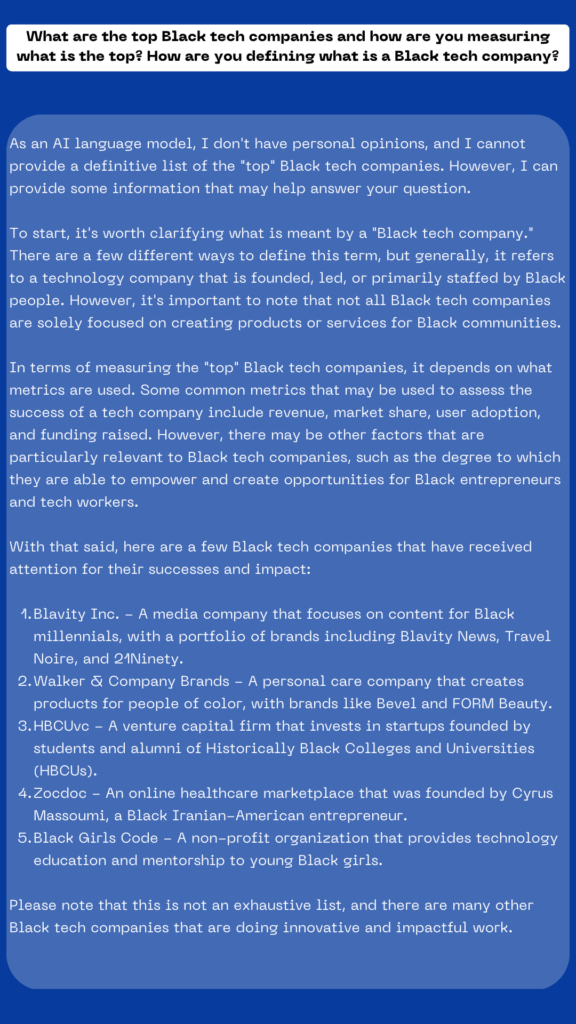

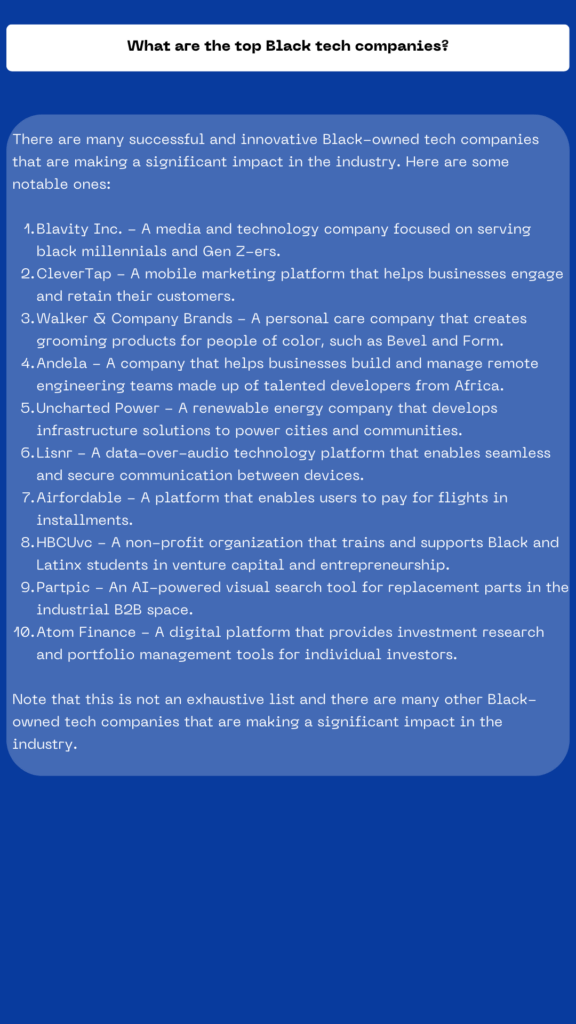

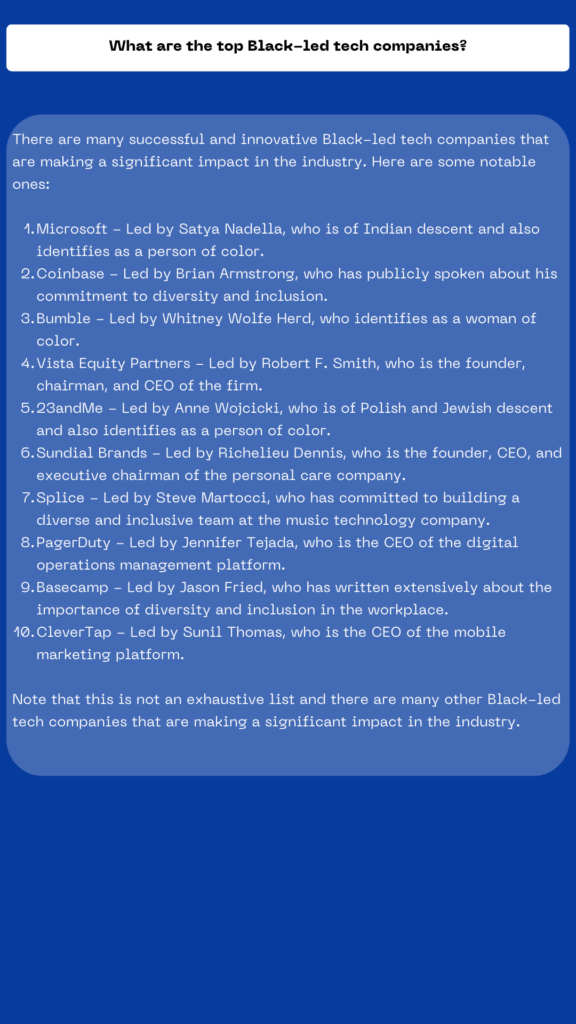

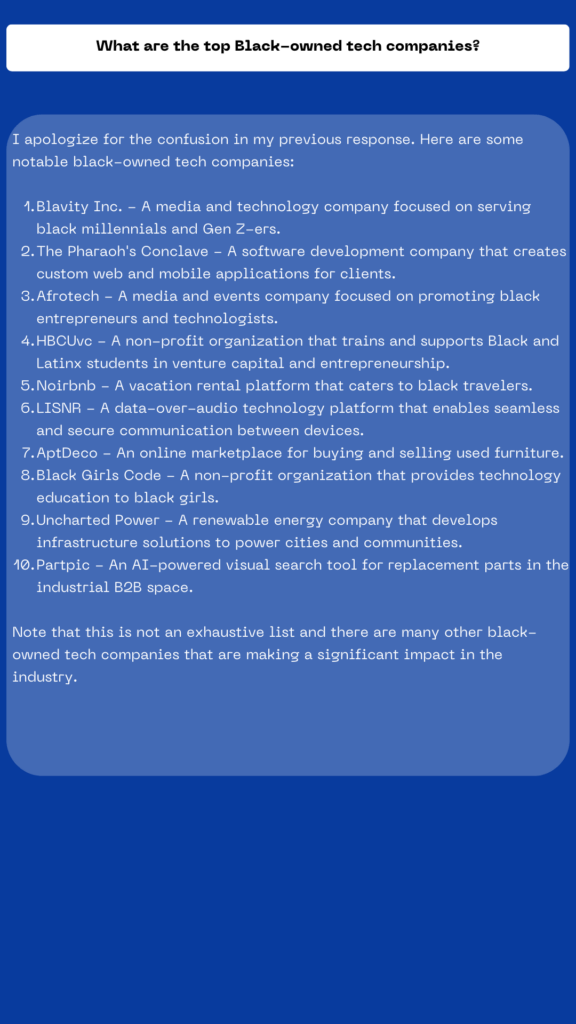

The first question we asked the bot was “What are the top Black tech companies?”

In a follow-up question, we also asked ChatGPT to explain how it measured what counts as “top” and how it defined a Black tech company. Adjusting the wording of the question produced two different lists, though some companies appeared on both.

ChatGPT also initially gave an incorrect description of one of the companies, HBCUvc, calling it a venture capital firm when in reality it is an organization working to give HBCU students opportunities in the VC industry.

The chatbot also doesn’t apply a clear definition of what counts as a Black business: when the question asked about Black-owned versus Black-led companies, it yielded different results.

So when using ChatGPT, it is important to keep your questions simple yet specific. However, when researching an unfamiliar topic, it is hard to know what kind of specificity you need to get the best results.

Built-in bias and inequity

Asmelash Teka, a research fellow at the Distributed AI Research Institute (DAIR), explained that AI chatbots like ChatGPT are built on top of large language models trained on text pulled from the internet with minimal filtering, so the training data itself can carry biases. By design, these models are not trained on datasets curated to solve a specific problem.

“The kind of approach that these big tech companies have been following now is who can build the biggest language model on the most amount of data,” Teka told The Plug. “It’s not a systematic approach of we want to solve a problem and here’s the data that will help us. Instead, it’s let’s go find the most information we can find.”

When The Plug asked ChatGPT about the datasets it was trained on and what sort of bias it may have as a result, the bot was vague, calling the information “proprietary to OpenAI.”

The proprietary nature of its training datasets highlights a major lack of transparency in the chatbot, but that’s a feature, not a bug. OpenAI’s stated mission is to ensure that artificial intelligence “benefits all of humanity,” but the company also has clear profit motives: it projects $1 billion in revenue by 2024. This month, OpenAI rolled out ChatGPT Plus, a paid plan that gives subscribers access even during peak hours, faster response times and priority access to new features and improvements.

The bot is a powerful tool that can be useful in many scenarios, but there’s a likely future where those who can get the most use from it are the haves rather than the have-nots.

Redirecting resources

Teka found ChatGPT helpful to a certain extent. As a non-native English speaker, he found it useful for composing emails. When he asked questions about Berlin, where he is based, the answers were accurate. But when he asked about his hometown in Tigray, Ethiopia, the answers he received were inaccurate.

“And I know the answer. Usually when you’re searching, you’re asking about stuff you don’t know,” Teka said.

“I think they can be really dangerous, to put it mildly, for a searcher who happens to use them for critical demands,” he said.

Teka is also the co-founder and CTO of Lesan, a machine translation system for Ethiopian languages. His native language is Tigrinya, and when he asked ChatGPT questions in Tigrinya rather than German, the results were nonsensical. Teka argues that the fanfare around big tech’s AI and language models is drawing investors away from AI built by Black tech founders focused on languages of the African diaspora.

Teka points to models like Meta’s No Language Left Behind, which translates between 200 languages, including low-resource languages. It was celebrated for being able to translate many African languages for the first time, but when the model was tested in collaboration with Ghana NLP, which translates Ghanaian languages, the results were found to be “subpar.”

“By overhyping these things they are then redirecting resources that would be invested in startups like ours that are providing actual solutions, not promises, that are even better than these big companies,” Teka said.

Pros and cons

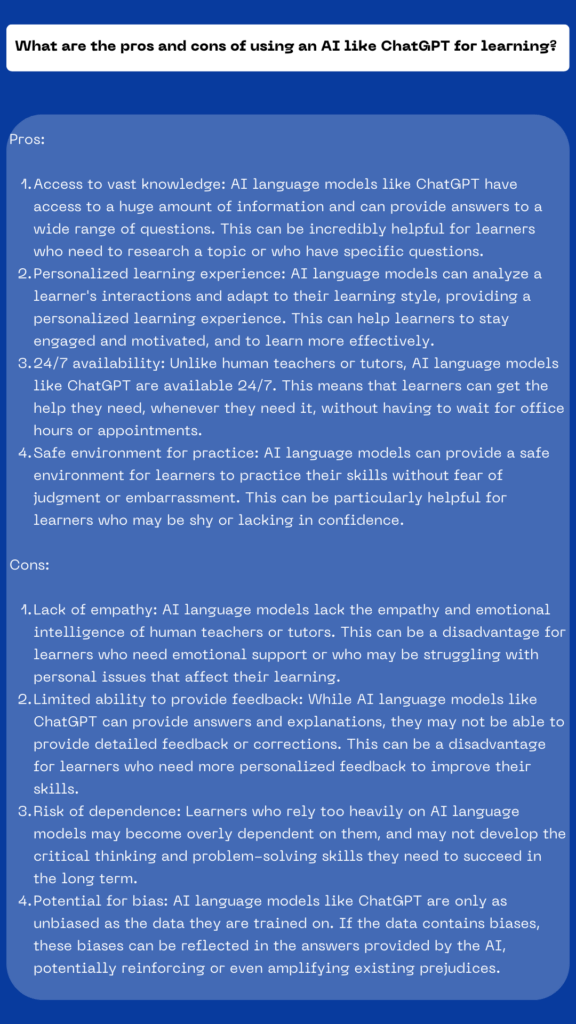

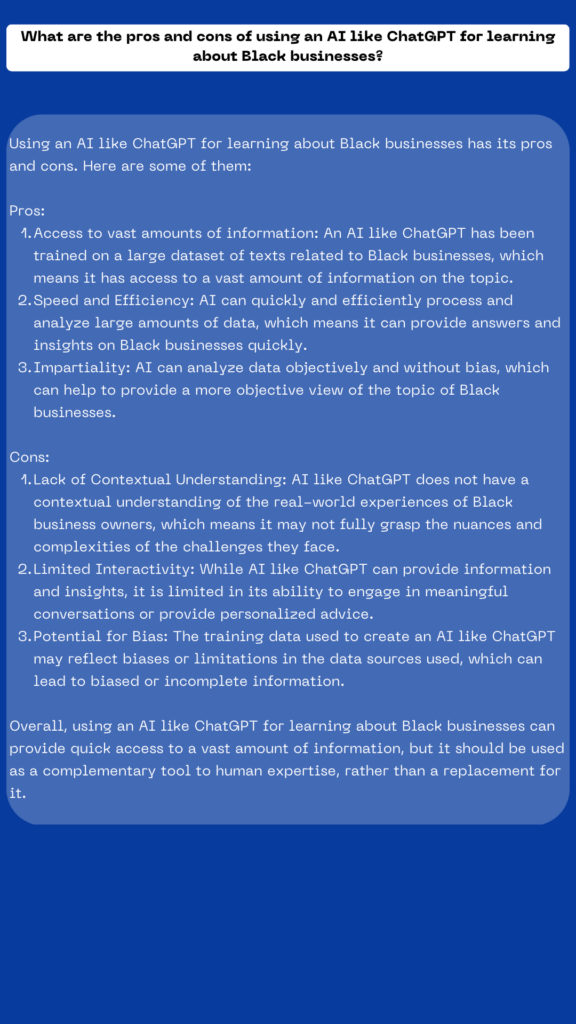

When asked about the pros and cons of using it for learning and for insights on Black businesses, ChatGPT listed a few of each.

Notably absent from the chatbot’s answers was the possibility of incorrect information or misinformation. A recent investigation found that when asked to write a “news” article about former New York City Mayor Michael Bloomberg, ChatGPT fabricated quotes outright and attributed them to Bloomberg.

Some tools aim to address these issues, such as NewsGuard for AI, a recently announced tool for training generative AI models to avoid spreading misinformation by recognizing the top false narratives circulating online.

ChatGPT is a powerful tool that can quickly distill complex topics, write or assist with code, and even help you draft interpersonal communication. But when using it, be aware of its biases and the motives behind it, and fact-check its answers, especially if you are unfamiliar with the subject matter.

Editor’s note: Alesia Bani contributed reporting.